Adventures in AT&T DNS: Solving the Problem of a Meddling ISP.

Lately I've been troubleshooting various issues with my own network and server setup, but there's been one that I've been putting off... because it involves the ISP.

I hate dealing with ISP issues. They will never admit the problem starts with them, and in the end I always end up going down a rabbit hole trying to find workarounds to a problem they deny exists.

In this case, the problem involves DNS resolution. We all know how that conversation goes.

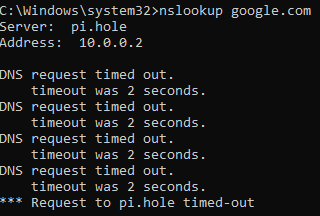

We are paying for AT&T gigabit fiber service, and for the most part it delivers. There's just one niggling problem: DNS resolution can take 15-20 seconds. Not milliseconds. Seconds. This of course means that initial DNS requests time out, so web pages won't load and services won't connect until the local DNS forwarding server has cached the records and can respond instantly to further queries.

Searching online reveals a long history of people complaining about this problem, and AT&T refusing to acknowledge it, or taking it to DMs.

A few particular posts stand out, however, like this one from Jammrock5 on the AT&T Forums:

Today I turned up a dnscrypt proxy and forced all DNS connections to use TCP. Then I forwarded all my Internet DNS searches to the dnscrypt proxy so I have no outbound UDP DNS traffic. Low and behold, the Internet is blazing fast again. Stupidly fast in some cases. Pages load nearly instantly for well-provisioned sites (Google, Microsoft, Facebook, Amazon, Twitch, YouTube, etc.), which is what I expect from a Gb fiber ISP.

Which brings me back to this post:

https://forums.att.com/t5/AT-T-Fiber-Equipment/Gigapower-Youtube-Throttling/m-p/5096061#M2437

DNS, like the QUIC protocol, both use UDP as the transport protocol. It is fairly well documented that QUIC on AT&T is horrible because UDP is throttled/limited/shaped/something'ed which causes it to be unusable from time to time. It appears that the anti-UDP traffic shapers have started to bleed over and effect other UDP traffic including, quite possibly THE most important service protocol on the internet, DNS.

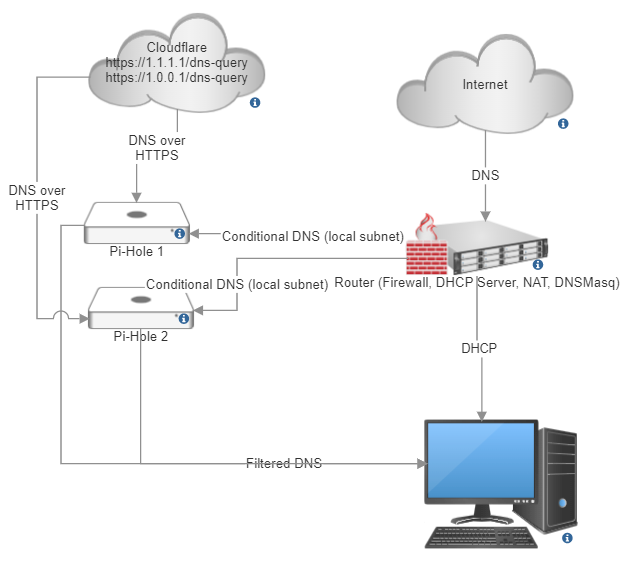
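The UDP-versus-TCP claim in the quoted post is easy to check before changing anything. Here is a minimal sketch that asks `dig` for the same name over each transport and prints the query time it reports. The function names are mine, and the resolver address in the usage note is a placeholder, not a value from this setup.

```shell
# Print the query time (ms) dig reports for one transport: "udp" or "tcp".
# Prints nothing if dig never got an answer (for example, on a timeout).
lookup_ms() {
    local transport="$1" server="$2" name="$3" flag="+notcp"
    if [ "$transport" = "tcp" ]; then
        flag="+tcp"
    fi
    dig "@${server}" "${name}" "${flag}" +tries=1 +time=20 \
        | awk '/^;; Query time:/ { print $4 }'
}

# Run the same lookup over UDP and then TCP and print both results.
compare_transports() {
    local server="$1" name="$2" t ms
    for t in udp tcp; do
        ms="$(lookup_ms "$t" "$server" "$name")"
        if [ -n "$ms" ]; then
            echo "${t}: ${ms} ms"
        else
            echo "${t}: no answer"
        fi
    done
}
```

Something like `compare_transports 192.168.1.1 example.com` would run it against a resolver at that (assumed) address. Keep in mind that a caching resolver answers the second query from cache, so use a name that hasn't been looked up recently for each run.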

This of course gives me an idea: rather than fight AT&T to stop breaking my DNS traffic, what if I just implemented DNS over HTTPS? I already have DNS forwarding servers configured on my network in the form of Pi-Holes.

Implementing DNS over HTTPS (DoH) via Cloudflared on Pi-Hole

I'll be following portions of this guide (archive mirror) to install cloudflared on a 32-bit Raspberry Pi. Other architectures are supported; see the original guide for the instructions specific to them.

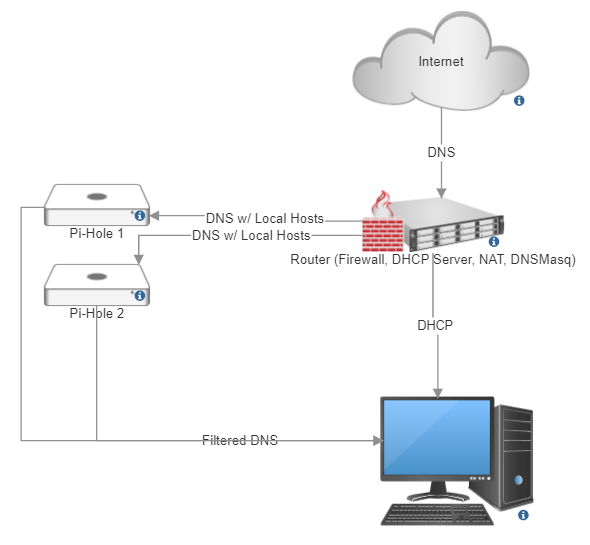

The first step is determining how we want this to work. Since I am hosting a local intranet and services on the LAN, I want internal clients to be able to receive hostnames and local addresses from the DHCP server (the router). Previously I did this by having the pi-holes forward all DNS queries to the router, and serve whatever answers they got back from dnsmasq on the router.

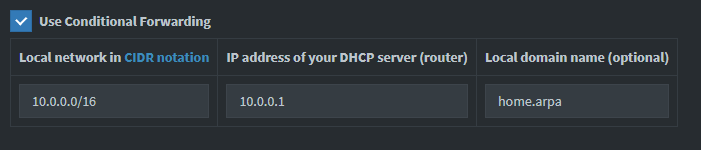

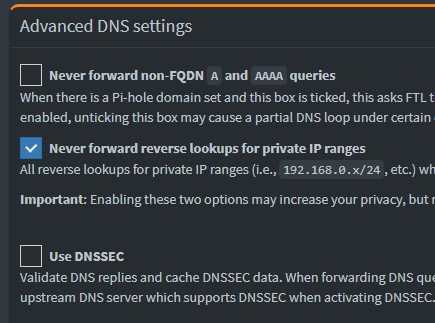

To minimize the hassle and complication of moving to DNS over HTTPS, we're going to cut the router out of the loop for all non-local traffic. We do this using conditional forwarding on the Raspberry Pi: queries for local names still go to the router, and everything else goes upstream. Fortunately, Pi-Hole makes this easy, with a simple GUI for DNS configuration options including conditional forwarding.
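For reference, Pi-hole's resolver is built on dnsmasq, and conditional forwarding boils down to two dnsmasq-style directives: one for the local domain and one for reverse lookups. The sketch below only prints them so you can see what the GUI setting amounts to. The domain `lan`, router address `192.168.1.1`, and subnet `192.168.1.0/24` are assumptions for illustration, and the GUI is still the right place to make the change.

```shell
# Print dnsmasq directives that send a local domain (and reverse lookups
# for the local subnet) to the router. All three values are placeholders.
conditional_forwarding_conf() {
    local domain="$1" router_ip="$2" cidr="$3"
    printf 'server=/%s/%s\n' "$domain" "$router_ip"
    printf 'rev-server=%s,%s\n' "$cidr" "$router_ip"
}

conditional_forwarding_conf lan 192.168.1.1 192.168.1.0/24
# server=/lan/192.168.1.1
# rev-server=192.168.1.0/24,192.168.1.1
```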

With this done, we can now freely tamper with the upstream DNS on the pi-holes without affecting local name resolution, so we don't break anything on that end.

To avoid breaking anything on the upstream end, we are going to set up DoH on one pi-hole at a time, removing the one we are configuring from the DNS pool on the DHCP server so that we can test it with manually configured DNS before handing it out via DHCP.

Now we connect to each pi-hole over SSH and install cloudflared:

wget https://github.com/cloudflare/cloudflared/releases/latest/download/cloudflared-linux-arm

sudo mv -f ./cloudflared-linux-arm /usr/local/bin/cloudflared

sudo chmod +x /usr/local/bin/cloudflared

cloudflared -v
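The commands above fetch the 32-bit ARM build. If you are on different hardware, a small helper can pick the release asset from the output of `uname -m`. This is a sketch: the function name is hypothetical, and the mapping reflects my reading of the asset names on the cloudflared releases page, so check it against the page before relying on it.

```shell
# Map a machine type (as printed by `uname -m`) to a cloudflared release asset.
# Prints the asset name, or returns non-zero for a machine type not listed here.
cloudflared_asset() {
    case "$1" in
        armv6l|armv7l) echo "cloudflared-linux-arm" ;;
        aarch64)       echo "cloudflared-linux-arm64" ;;
        x86_64)        echo "cloudflared-linux-amd64" ;;
        *)             echo "unsupported machine type: $1" >&2; return 1 ;;
    esac
}

# Example:
# asset="$(cloudflared_asset "$(uname -m)")" &&
#   wget "https://github.com/cloudflare/cloudflared/releases/latest/download/${asset}"
```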

We are going to configure cloudflared to run on startup using a service account. First we create the user account.

sudo useradd -s /usr/sbin/nologin -r -M cloudflared

Then we create a configuration file:

sudo nano /etc/default/cloudflared

# Commandline args for cloudflared, using Cloudflare DNS

CLOUDFLARED_OPTS=--port 5053 --upstream https://1.1.1.1/dns-query --upstream https://1.0.0.1/dns-query

Note: you can also use Google's DNS servers or Quad9, both of which support DoH as well:

--upstream https://8.8.8.8/dns-query --upstream https://9.9.9.9/dns-query

Next we change ownership of the configuration file and binary so the service account can use them:

sudo chown cloudflared:cloudflared /etc/default/cloudflared

sudo chown cloudflared:cloudflared /usr/local/bin/cloudflared

Then we create a systemd unit file to run the service on startup:

sudo nano /etc/systemd/system/cloudflared.service

[Unit]

Description=cloudflared DNS over HTTPS proxy

After=syslog.target network-online.target

[Service]

Type=simple

User=cloudflared

EnvironmentFile=/etc/default/cloudflared

ExecStart=/usr/local/bin/cloudflared proxy-dns $CLOUDFLARED_OPTS

Restart=on-failure

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

Then we enable and launch the service:

sudo systemctl enable cloudflared

sudo systemctl start cloudflared

sudo systemctl status cloudflared

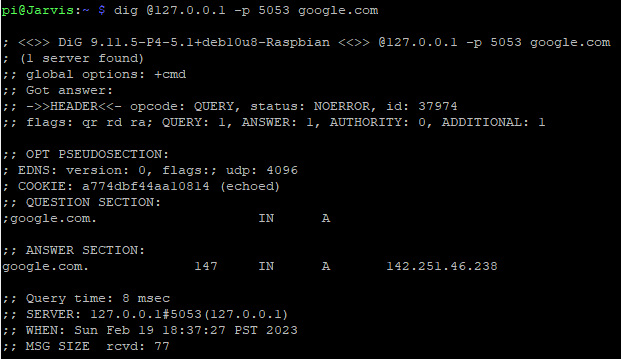

Finally, we can test that it is working using dig.

dig @127.0.0.1 -p 5053 google.com

and we should get an output that looks like this:

Success!

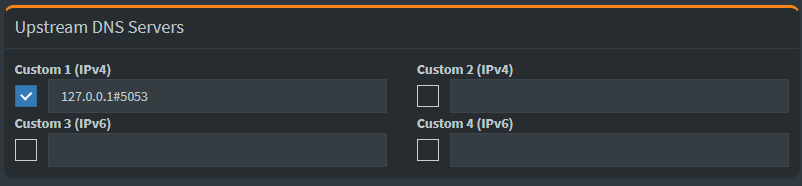

Now we simply direct the pi-hole to use the cloudflared service on port 5053 as the upstream DNS server instead of the router. This is also a simple change in the same GUI we used earlier:

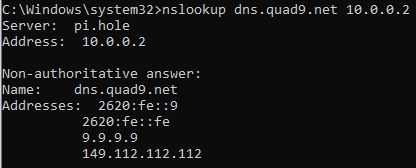

With this done we can test name resolution on a client using the pi-hole as a DNS server:
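One way to run that client-side test from a shell is to resolve a public name and a local hostname through the pi-hole, which confirms both the DoH upstream and conditional forwarding in one go. This is a rough sketch: `check_names` is a hypothetical helper, and the address and hostnames in the example are placeholders for your own.

```shell
# Resolve each name through the given DNS server and report the result.
# Returns non-zero if any name fails to resolve.
check_names() {
    local server="$1" name answer failed=0
    shift
    for name in "$@"; do
        answer="$(dig "@${server}" "${name}" +short +tries=1 +time=5 | head -n 1)"
        if [ -n "$answer" ]; then
            echo "OK   ${name} -> ${answer}"
        else
            echo "FAIL ${name}"
            failed=1
        fi
    done
    return "$failed"
}

# Example (192.168.1.2 and nas.lan are placeholders):
# check_names 192.168.1.2 google.com nas.lan
```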

Now we simply repeat the procedure on the second pi-hole for redundancy, and then add both of them back to the DNS server list in the DHCP server settings on the router.

Our final configuration looks something like this:

To keep cloudflared up to date, you should periodically fetch and install the new binary:

wget https://github.com/cloudflare/cloudflared/releases/latest/download/cloudflared-linux-arm

sudo systemctl stop cloudflared

sudo mv -f ./cloudflared-linux-arm /usr/local/bin/cloudflared

sudo chmod +x /usr/local/bin/cloudflared

sudo systemctl start cloudflared

cloudflared -v

sudo systemctl status cloudflared
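If you script the update, it is worth skipping the swap when the download is identical to what is already installed. Below is a minimal sketch of that check using `cmp`. The function name is hypothetical, and it assumes you run it as root with the freshly downloaded file as the first argument.

```shell
# Replace the installed binary only if the downloaded one differs.
# Prints "updated" or "unchanged" so the caller can decide whether to restart.
install_if_changed() {
    local new="$1" installed="$2"
    if [ -f "$installed" ] && cmp -s "$new" "$installed"; then
        rm -f "$new"          # identical: discard the download
        echo "unchanged"
    else
        mv -f "$new" "$installed"
        chmod +x "$installed"
        echo "updated"
    fi
}

# Example:
# install_if_changed ./cloudflared-linux-arm /usr/local/bin/cloudflared
```

Only when it prints `updated` do you need to restart the cloudflared service.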

Final Notes

After changing the pi-hole configuration, I found it necessary to edit /etc/resolv.conf on the pi-hole to use the nameserver 127.0.0.1; otherwise the adlist updates would fail to resolve DNS. I don't know why this problem only surfaced now, but to fix it:

sudo nano /etc/resolv.conf

# Generated by resolvconf

nameserver 127.0.0.1
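The "Generated by resolvconf" header suggests this file can be rewritten behind your back, so it may be worth checking now and then that the edit is still in place. Here is a small sketch of such a check; `first_nameserver` is a hypothetical helper, and it reads `/etc/resolv.conf` unless given another path.

```shell
# Print the first nameserver listed in a resolv.conf-style file.
first_nameserver() {
    awk '$1 == "nameserver" { print $2; exit }' "${1:-/etc/resolv.conf}"
}

# Example:
# [ "$(first_nameserver)" = "127.0.0.1" ] || echo "resolv.conf no longer points at localhost"
```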

That's all! The changes took maybe half an hour in total, a small price for a major quality-of-life improvement.